Lab environment

# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.3 (Maipo)

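A single-node cluster is meant for testing only. Production clusters generally run three or more nodes and give every OSD its own disk. In this lab the OSDs are partitions carved from one local disk rather than separate disks, and there is one Monitor node and one RGW node.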

Preparation

1. Create the partitions

Create nine 10 GB partitions on a dedicated disk (/dev/sdb here)

# for i in 1 2 3 4 5 6 7 8 9 ; do sgdisk -n $i:0:+10G /dev/sdb ; done
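The loop above can also be wrapped in a small helper that prints the commands before running them, which is useful on a disk you would rather not repartition by accident. This is only a sketch: `make_partitions` and the `DRY_RUN` variable are names made up for illustration and are not part of sgdisk.

```shell
# Print (or run) the sgdisk commands that create COUNT partitions of SIZE on DISK.
# DRY_RUN defaults to 1, so nothing touches the disk unless DRY_RUN=0 is set.
make_partitions() {
    mp_disk=$1; mp_count=$2; mp_size=$3
    mp_i=1
    while [ "$mp_i" -le "$mp_count" ]; do
        mp_cmd="sgdisk -n $mp_i:0:+$mp_size $mp_disk"
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "$mp_cmd"
        else
            $mp_cmd || return 1
        fi
        mp_i=$((mp_i + 1))
    done
}

# Dry run: shows the nine commands from the step above without executing them.
make_partitions /dev/sdb 9 10G
```

Once the printed commands look right, running `DRY_RUN=0 make_partitions /dev/sdb 9 10G` as root would execute them.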

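2. Configure the yum repositories

This setup uses the Aliyun yum mirrors. The aliyuncs entries, which are only reachable from Aliyun's internal network, are removed, and the release version is pinned to 7 (on RHEL, $releasever typically expands to 7Server, which the CentOS mirror paths do not contain). Both changes keep yum from wasting time on unreachable URLs.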

# rm -rf /etc/yum.repos.d/*

# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# sed -i '/aliyuncs/d' /etc/yum.repos.d/CentOS-Base.repo

# sed -i '/aliyuncs/d' /etc/yum.repos.d/epel.repo

# sed -i 's/$releasever/7/g' /etc/yum.repos.d/CentOS-Base.repo

Create the Ceph repository

# cat /etc/yum.repos.d/ceph.repo

[ceph]

name=ceph

baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/

gpgcheck=0

[ceph-noarch]

name=cephnoarch

baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/

gpgcheck=0
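The step above only displays the finished file. One way to create it non-interactively is a here-document, sketched below. The function name `write_ceph_repo` and its path argument are illustrative assumptions; the repository contents are copied from the listing above.

```shell
# Write the two Ceph repository sections to the given path.
# The path is a parameter so the sketch can be tried outside /etc/yum.repos.d first.
write_ceph_repo() {
    wcr_target=$1
    cat > "$wcr_target" <<'EOF'
[ceph]
name=ceph
baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
gpgcheck=0

[ceph-noarch]
name=cephnoarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
gpgcheck=0
EOF
}

# Try it on a scratch file and show the result.
write_ceph_repo "${TMPDIR:-/tmp}/ceph.repo"
cat "${TMPDIR:-/tmp}/ceph.repo"
```

On the real node the call would be `write_ceph_repo /etc/yum.repos.d/ceph.repo`, run as root.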

3. Configure key-based SSH login to the node

# ssh-keygen

# ssh-copy-id -i node1

4. Install the ceph-deploy, ceph, and ceph-radosgw packages

# yum -y install ceph-deploy ceph ceph-radosgw

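Strictly speaking, only ceph-deploy needs to be installed on the deploy node; ceph and ceph-radosgw are installed automatically while the cluster is being configured. Installing them here simply confirms that the yum repositories can deliver the packages.

5. Confirm the package versions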

# ceph-deploy --version

1.5.39

# ceph -v

ceph version 10.2.11 (e4b061b47f07f583c92a050d9e84b1813a35671e)

Deploying Ceph

1. Create the deployment directory and start the deployment

# mkdir cluster/

# cd cluster/

# ceph-deploy new node1

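If key-based login was not set up in the earlier step, ceph-deploy new node1 prompts for the node's password. When the command finishes, the cluster directory contains the following files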

# ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring

2. Add the public_network option to the configuration file

# echo public_network=192.168.3.0/24 >> ceph.conf

The complete configuration file then reads

# cat ceph.conf

[global]

fsid = 3696fe22-36ed-4b3b-ba4e-de873e4b1455

mon_initial_members = node1

mon_host = 192.168.3.100

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public_network = 192.168.3.0/24
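Because the `echo ... >> ceph.conf` step appends unconditionally, running it twice leaves a duplicate line. A guarded variant might look like the sketch below; `ensure_public_network` is a hypothetical helper name, and the check accepts both the `public_network` and `public network` spellings, since Ceph treats spaces and underscores in option names alike.

```shell
# Append public_network to a ceph.conf only if the option is not set yet.
ensure_public_network() {
    epn_conf=$1; epn_cidr=$2
    if grep -Eq '^[[:space:]]*public[ _]network[[:space:]]*=' "$epn_conf"; then
        return 0   # already present, leave the file untouched
    fi
    echo "public_network = $epn_cidr" >> "$epn_conf"
}

# Demonstration on a scratch file rather than the real ceph.conf.
demo_conf=$(mktemp)
printf '[global]\nmon_host = 192.168.3.100\n' > "$demo_conf"
ensure_public_network "$demo_conf" 192.168.3.0/24
ensure_public_network "$demo_conf" 192.168.3.0/24   # second call is a no-op
cat "$demo_conf"
rm -f "$demo_conf"
```

In the deployment directory the equivalent call would be `ensure_public_network ceph.conf 192.168.3.0/24`.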

3. Deploy the Monitor

# ceph-deploy mon create-initial

4. Deploy the OSDs

# ceph-deploy --overwrite-conf osd prepare node1:/dev/sdb{1,2,3,4,5,6,7,8,9}

# ceph-deploy --overwrite-conf osd activate node1:/dev/sdb{1,2,3,4,5,6,7,8,9}

5. Adjust the CRUSH map

The current failure domain is host and the replica count is 3

# ceph osd crush rule dump

[

{

"rule_id": 0,

"rule_name": "replicated_ruleset",

"ruleset": 0,

"type": 1,

"min_size": 1,

"max_size": 10,

"steps": [

{

"op": "take",

"item": -1,

"item_name": "default"

},

{

"op": "chooseleaf_firstn",

"num": 0,

"type": "host"

},

{

"op": "emit"

}

]

}

]

# ceph osd pool get rbd size

size: 3

Because there is only one node, placing three replicas requires creating fake host buckets by hand and moving them under the root

# ceph osd crush add-bucket node2 host

# ceph osd crush add-bucket node3 host

# ceph osd crush move node2 root=default

# ceph osd crush move node3 root=default

Spread the nine OSDs evenly across the three hosts

# for i in 1 4 7 ; do ceph osd crush move osd.$i host=node2 ; done

# for i in 2 5 8 ; do ceph osd crush move osd.$i host=node3 ; done

The OSD layout after the change

# ceph osd tree

ID WEIGHT TYPE NAME UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 0.08817 root default

-2 0.02939 host node1

0 0.00980 osd.0 up 1.00000 1.00000

3 0.00980 osd.3 up 1.00000 1.00000

6 0.00980 osd.6 up 1.00000 1.00000

-3 0.02939 host node2

1 0.00980 osd.1 up 1.00000 1.00000

4 0.00980 osd.4 up 1.00000 1.00000

7 0.00980 osd.7 up 1.00000 1.00000

-4 0.02939 host node3

2 0.00980 osd.2 up 1.00000 1.00000

5 0.00980 osd.5 up 1.00000 1.00000

8 0.00980 osd.8 up 1.00000 1.00000
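The assignment used above is a round-robin by OSD id (id modulo 3 picks the host). For a different OSD or host count, the move commands could be generated rather than typed, as in this sketch. `plan_osd_moves` is an illustrative name; it only prints commands, and it assumes that OSD ids run from 0 upward and that every OSD starts out under the first host listed.

```shell
# Print the "ceph osd crush move" commands that spread OSD ids 0..COUNT-1
# round-robin across the hosts given as the remaining arguments.
plan_osd_moves() {
    pom_count=$1; shift
    pom_hosts=$#
    pom_offset=0
    for pom_host in "$@"; do
        # The first host is assumed to hold every OSD already, so it needs no move.
        if [ "$pom_offset" -gt 0 ]; then
            pom_id=$pom_offset
            while [ "$pom_id" -lt "$pom_count" ]; do
                echo "ceph osd crush move osd.$pom_id host=$pom_host"
                pom_id=$((pom_id + pom_hosts))
            done
        fi
        pom_offset=$((pom_offset + 1))
    done
}

# Reproduces the six moves from the step above (osd.1,4,7 and osd.2,5,8).
plan_osd_moves 9 node1 node2 node3
```

Piping the output to `sh` would execute the moves; reviewing the printed list first is the safer habit.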

6. Deploy RGW

# cd cluster/

# ceph-deploy rgw create node1

7. Check the cluster status

# ceph -s

cluster 3696fe22-36ed-4b3b-ba4e-de873e4b1455

health HEALTH_OK

monmap e1: 1 mons at {node1=192.168.3.100:6789/0}

election epoch 3, quorum 0 node1

osdmap e68: 9 osds: 9 up, 9 in

flags sortbitwise,require_jewel_osds

pgmap v187: 104 pgs, 6 pools, 1588 bytes data, 171 objects

47055 MB used, 45014 MB / 92070 MB avail

104 active+clean

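Depending on the cluster, a too few PGs per OSD warning may appear after deployment. In that case pg_num and pgp_num have to be raised by hand. A common rule of thumb for the value is 100 * number of OSDs / replica count / number of pools, rounded to the nearest power of two.

Configuring s3cmd and verifying with S3 Browser

1. Install s3cmd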
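The rule of thumb can be worked through in shell arithmetic. The sketch below is one possible reading of it: `pg_per_pool` is a made-up helper name, the division is integer division, and a tie between two powers of two is resolved upward, which the original text does not specify.

```shell
# Suggest a per-pool PG count: 100 * OSDs / replicas / pools,
# rounded to the nearest power of two.
pg_per_pool() {
    ppp_osds=$1; ppp_replicas=$2; ppp_pools=$3
    ppp_target=$((100 * ppp_osds / ppp_replicas / ppp_pools))
    if [ "$ppp_target" -lt 1 ]; then
        ppp_target=1
    fi
    # Find the largest power of two that does not exceed the target.
    ppp_lower=1
    while [ $((ppp_lower * 2)) -le "$ppp_target" ]; do
        ppp_lower=$((ppp_lower * 2))
    done
    ppp_upper=$((ppp_lower * 2))
    # Pick whichever neighbouring power of two is closer; ties go up.
    if [ $((ppp_target - ppp_lower)) -lt $((ppp_upper - ppp_target)) ]; then
        echo "$ppp_lower"
    else
        echo "$ppp_upper"
    fi
}

# This lab: 9 OSDs, 3 replicas, 6 pools gives a target of 50.
pg_per_pool 9 3 6   # prints 64
```

The result would then be applied per pool with `ceph osd pool set <pool> pg_num <n>` followed by the same for `pgp_num`.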

# yum -y install s3cmd

2. Create an S3 user

# radosgw-admin user create --uid=admin --access-key=123456 --secret-key=123456 --display-name=admin

{

"user_id": "admin",

"display_name": "admin",

"email": "",

"suspended": 0,

"max_buckets": 1000,

"auid": 0,

"subusers": [],

"keys": [

{

"user": "admin",

"access_key": "123456",

"secret_key": "123456"

}

],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"default_placement": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"max_size_kb": -1,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"max_size_kb": -1,

"max_objects": -1

},

"temp_url_keys": []

}

3. Create the s3cmd configuration file

Create a .s3cfg file in root's home directory with the following content

# vim .s3cfg

[default]

access_key = 123456

bucket_location = US

cloudfront_host = 192.168.3.100:7480

cloudfront_resource = /2010-07-15/distribution

default_mime_type = binary/octet-stream

delete_removed = False

dry_run = False

encoding = UTF-8

encrypt = False

follow_symlinks = False

force = False

get_continue = False

gpg_command = /usr/bin/gpg

gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s

gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s

gpg_passphrase =

guess_mime_type = True

host_base = 192.168.3.100:7480

host_bucket = 192.168.3.100:7480/%(bucket)

human_readable_sizes = False

list_md5 = False

log_target_prefix =

preserve_attrs = True

progress_meter = True

proxy_host =

proxy_port = 0

recursive = False

recv_chunk = 4096

reduced_redundancy = False

secret_key = 123456

send_chunk = 96

simpledb_host = sdb.amazonaws.com

skip_existing = False

socket_timeout = 300

urlencoding_mode = normal

use_https = False

verbosity = WARNING

signature_v2 = True
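Most of the keys above are generic s3cmd settings; the ones specific to this RGW endpoint are the two keys, `host_base`, `host_bucket`, `use_https`, and `signature_v2`. The sketch below writes only those. It assumes that s3cmd falls back to built-in defaults for every key left out, which is worth confirming against the installed s3cmd version, and `write_s3cfg` is a hypothetical helper name.

```shell
# Write a reduced s3cmd config for an RGW endpoint.
# Arguments: output path, endpoint (host:port), access key, secret key.
write_s3cfg() {
    ws_out=$1; ws_endpoint=$2; ws_access=$3; ws_secret=$4
    # Create the file (if new) with owner-only permissions, since it holds the secret key.
    ( umask 077 && : > "$ws_out" ) || return 1
    cat > "$ws_out" <<EOF
[default]
access_key = $ws_access
secret_key = $ws_secret
host_base = $ws_endpoint
host_bucket = $ws_endpoint/%(bucket)
use_https = False
signature_v2 = True
EOF
}

# Demonstration with the values used in this walkthrough, written to a scratch file.
demo_cfg=$(mktemp)
write_s3cfg "$demo_cfg" 192.168.3.100:7480 123456 123456
cat "$demo_cfg"
rm -f "$demo_cfg"
```

To use it for real, the output path would be `/root/.s3cfg`, or any other path passed to s3cmd with `-c`.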

4. Create a bucket

# s3cmd mb s3://test

Bucket 's3://test/' created

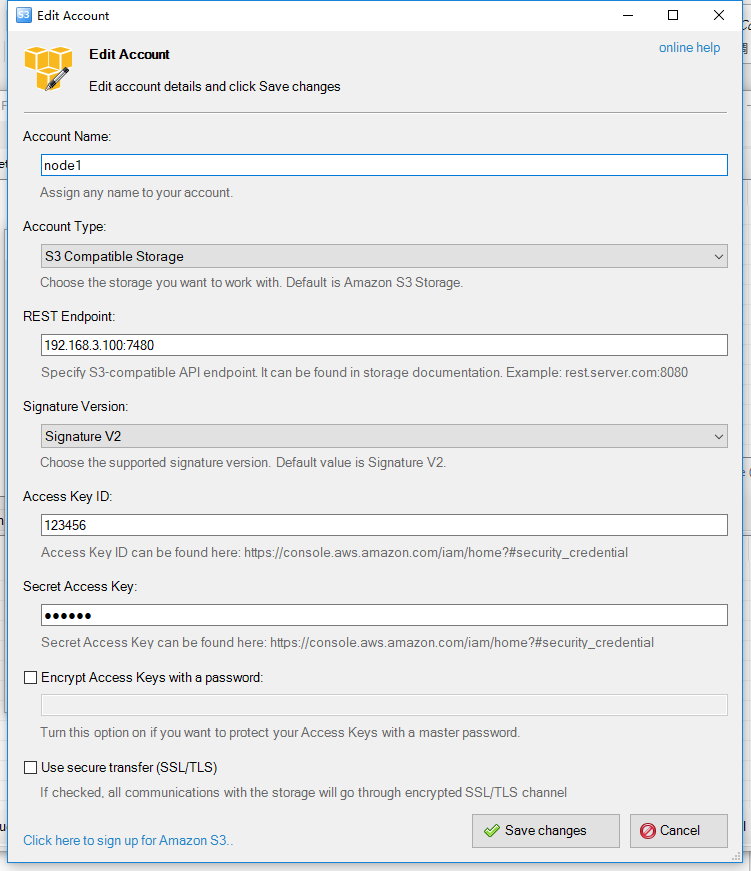

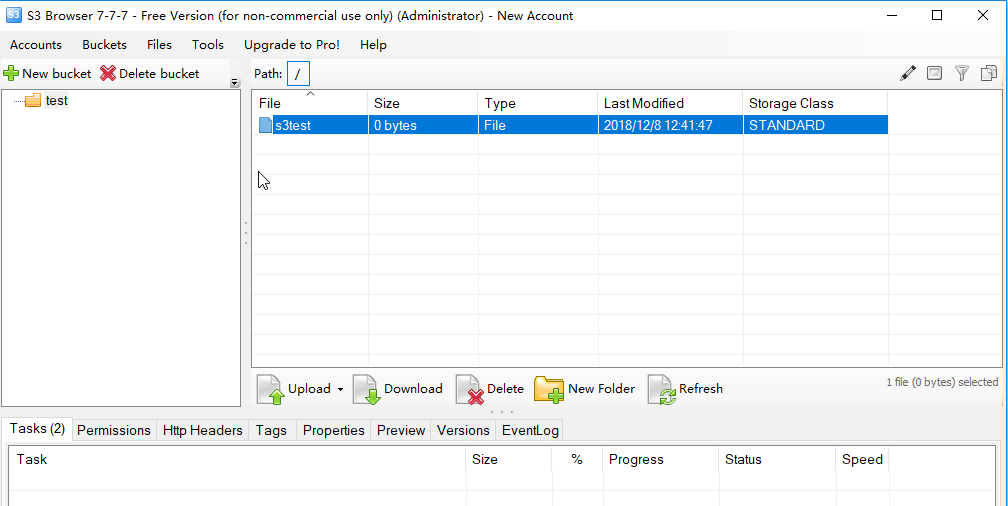

5. Configure S3 Browser and upload a test file

6. List the uploaded file with s3cmd

# s3cmd ls s3://test

2018-12-08 04:38 0 s3://test/s3test