Environment Setup

1. Host list

| IP | Hostname | Roles |
|---|---|---|
| 192.168.1.10 | mon1 | mon, osd, rgw |
| 192.168.1.11 | mon2 | mon, osd, rgw |
| 192.168.1.12 | mon3 | mon, osd, rgw |

2. Configure the yum repositories

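We use the Aliyun (Alibaba Cloud) mirrors. The aliyuncs entries in the repo files are Alibaba Cloud internal mirrors that are only reachable from inside Aliyun's network, so we delete them, and we replace $releasever with a fixed version number (7). Both changes make yum faster. Run the following on every node.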

$ rm -rf /etc/yum.repos.d/*
$ wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
$ wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
$ sed -i '/aliyuncs/d' /etc/yum.repos.d/CentOS-Base.repo
$ sed -i '/aliyuncs/d' /etc/yum.repos.d/epel.repo
$ sed -i 's/$releasever/7/g' /etc/yum.repos.d/CentOS-Base.repo
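Because `rm -rf` and `sed -i` edit the files in place, it can be worth previewing the edits on a copy first. The sketch below bundles both sed expressions into one hypothetical helper, `apply_repo_edits` (a name introduced here, not part of any tool); note that above, the `$releasever` substitution is only applied to CentOS-Base.repo, while this helper applies both edits to whatever file you pass it.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: apply the two edits from the step above to one repo file.
#   '/aliyuncs/d'           deletes every line that mentions an aliyuncs mirror
#   's/\$releasever/7/g'    replaces the literal text $releasever with 7
apply_repo_edits() {
  sed -i -e '/aliyuncs/d' -e 's/\$releasever/7/g' "$1"
}

# Example: preview the result on a copy before touching the real file
#   cp /etc/yum.repos.d/CentOS-Base.repo /tmp/base.repo
#   apply_repo_edits /tmp/base.repo
#   diff /etc/yum.repos.d/CentOS-Base.repo /tmp/base.repo
```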

Configure the Ceph repository. This guide uses the Luminous release:

$ vim /etc/yum.repos.d/ceph-luminous.repo
[ceph]
name=x86_64
baseurl=https://mirrors.aliyun.com/ceph/rpm-luminous/el7/x86_64/
gpgcheck=0
[ceph-noarch]
name=noarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch/
gpgcheck=0
[ceph-aarch64]
name=aarch64
baseurl=https://mirrors.aliyun.com/ceph/rpm-luminous/el7/aarch64/
gpgcheck=0
[ceph-SRPMS]
name=SRPMS
baseurl=https://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS/
gpgcheck=0
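Since the file has to be created on every node, a non-interactive version is handy. This is a minimal sketch: `write_ceph_repo` is a hypothetical helper, and it writes the same four sections (same names, same mirror paths, `gpgcheck=0`) as the file above.

```shell
#!/usr/bin/env bash
set -euo pipefail

MIRROR=https://mirrors.aliyun.com/ceph/rpm-luminous/el7

# Hypothetical helper: write the Luminous repo file to the path given as $1.
write_ceph_repo() {
  local out=$1 entry id dir
  : > "$out"   # start from an empty file
  # Each entry is "<section name>:<directory under $MIRROR>"
  for entry in ceph:x86_64 ceph-noarch:noarch ceph-aarch64:aarch64 ceph-SRPMS:SRPMS; do
    id=${entry%%:*}
    dir=${entry#*:}
    printf '[%s]\nname=%s\nbaseurl=%s/%s/\ngpgcheck=0\n\n' "$id" "$dir" "$MIRROR" "$dir" >> "$out"
  done
}

# Example: write_ceph_repo /etc/yum.repos.d/ceph-luminous.repo
```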

Monitor Deployment

Install the packages

On each Monitor node, install ceph with yum:

$ yum -y install ceph

Deploy the first Monitor node

1. Log in to mon1 and check that the /etc/ceph directory has been created:

$ ls /etc/ceph/
rbdmap

2. Generate a UUID for the cluster (it is used as the fsid):

$ uuidgen
b6f87f4d-c8f7-48c0-892e-71bc16cce7ff

3. Create the cluster configuration file with the following content:

$ vim /etc/ceph/ceph.conf
[global]
fsid = b6f87f4d-c8f7-48c0-892e-71bc16cce7ff
mon initial members = mon1
mon host = 192.168.1.10
public network = 192.168.1.0/24
cluster network = 192.168.1.0/24

4. Generate the Monitor keyring:

$ ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

5. Create the admin user's key and grant it capabilities:

$ sudo ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring --gen-key -n client.admin \
    --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mgr 'allow *' --cap rgw 'allow *'

6. Generate the OSD bootstrap key:

$ sudo ceph-authtool --create-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring --gen-key -n client.bootstrap-osd \
    --cap mon 'profile bootstrap-osd'

7. Import the generated keys into ceph.mon.keyring:

$ sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring
$ sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring

8. Generate the monmap:

$ monmaptool --create --add mon1 192.168.1.10 --fsid b6f87f4d-c8f7-48c0-892e-71bc16cce7ff /tmp/monmap

9. Create the data directory:

$ sudo -u ceph mkdir /var/lib/ceph/mon/ceph-mon1

10. Change the owner of ceph.mon.keyring to the ceph user:

$ chown ceph.ceph /tmp/ceph.mon.keyring

11. Initialize the Monitor:

$ sudo -u ceph ceph-mon --mkfs -i mon1 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring

12. Start the Monitor and check its status:

$ systemctl start ceph-mon@mon1
$ systemctl status ceph-mon@mon1
● ceph-mon@mon1.service - Ceph cluster monitor daemon
   Loaded: loaded (/usr/lib/systemd/system/ceph-mon@.service; disabled; vendor preset: disabled)
   Active: active (running) since Mon 2019-03-04 11:19:47 CST; 50s ago
 Main PID: 1462 (ceph-mon)

13. Enable the Monitor at boot:

$ systemctl enable ceph-mon@mon1

Deploy the other Monitor nodes

The following uses mon2 as an example.

1. On mon1, copy the configuration and keyring files to mon2:

$ scp /etc/ceph/* mon2:/etc/ceph/
$ scp /var/lib/ceph/bootstrap-osd/ceph.keyring mon2:/var/lib/ceph/bootstrap-osd/
$ scp /tmp/ceph.mon.keyring mon2:/tmp/ceph.mon.keyring

2. On mon2, create the data directory:

$ sudo -u ceph mkdir /var/lib/ceph/mon/ceph-mon2

3. Change the owner of ceph.mon.keyring to the ceph user:

$ chown ceph.ceph /tmp/ceph.mon.keyring

4. Fetch the Monitor key and the monmap from the running cluster:

$ ceph auth get mon. -o /tmp/ceph.mon.keyring
$ ceph mon getmap -o /tmp/ceph.mon.map

5. Initialize the Monitor:

$ sudo -u ceph ceph-mon --mkfs -i mon2 --monmap /tmp/ceph.mon.map --keyring /tmp/ceph.mon.keyring

6. Start the Monitor and check its status:

$ systemctl start ceph-mon@mon2
$ systemctl status ceph-mon@mon2
● ceph-mon@mon2.service - Ceph cluster monitor daemon
   Loaded: loaded (/usr/lib/systemd/system/ceph-mon@.service; disabled; vendor preset: disabled)
   Active: active (running) since Mon 2019-03-04 11:31:37 CST; 8s ago
 Main PID: 1417 (ceph-mon)

7. Enable the Monitor at boot:

$ systemctl enable ceph-mon@mon2

8. Deploy the Monitor on mon3 the same way (first copy the files from mon1 to mon3 as in step 1):

$ sudo -u ceph mkdir /var/lib/ceph/mon/ceph-mon3
$ chown ceph.ceph /tmp/ceph.mon.keyring
$ ceph auth get mon. -o /tmp/ceph.mon.keyring
$ ceph mon getmap -o /tmp/ceph.mon.map
$ sudo -u ceph ceph-mon --mkfs -i mon3 --monmap /tmp/ceph.mon.map --keyring /tmp/ceph.mon.keyring
$ systemctl start ceph-mon@mon3
$ systemctl enable ceph-mon@mon3

9. Once all three are deployed, add mon2 and mon3 to the Ceph configuration file and copy it to the other nodes:

$ vim /etc/ceph/ceph.conf
[global]
fsid = b6f87f4d-c8f7-48c0-892e-71bc16cce7ff
mon initial members = mon1,mon2,mon3
mon host = 192.168.1.10,192.168.1.11,192.168.1.12
public network = 192.168.1.0/24
cluster network = 192.168.1.0/24
$ scp /etc/ceph/ceph.conf mon2:/etc/ceph/ceph.conf
$ scp /etc/ceph/ceph.conf mon3:/etc/ceph/ceph.conf
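The [global] section only varies in the fsid, the monitor list and the network, so it can be generated instead of hand-edited. This is a sketch: `render_ceph_conf` is a hypothetical helper that prints the same keys as the file above, using one network for both public and cluster traffic as this guide does.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print a [global] section.
#   $1 fsid   $2 comma-separated mon names   $3 comma-separated mon IPs   $4 network (CIDR)
render_ceph_conf() {
  local fsid=$1 names=$2 ips=$3 net=$4
  cat <<EOF
[global]
fsid = $fsid
mon initial members = $names
mon host = $ips
public network = $net
cluster network = $net
EOF
}

# Example (on mon1):
#   render_ceph_conf b6f87f4d-c8f7-48c0-892e-71bc16cce7ff mon1,mon2,mon3 \
#       192.168.1.10,192.168.1.11,192.168.1.12 192.168.1.0/24 > /etc/ceph/ceph.conf
#   for h in mon2 mon3; do scp /etc/ceph/ceph.conf "$h":/etc/ceph/ceph.conf; done
```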

10. Log in to each of the three nodes and restart the Monitor running there:

$ systemctl restart ceph-mon@mon1    # on mon1
$ systemctl restart ceph-mon@mon2    # on mon2
$ systemctl restart ceph-mon@mon3    # on mon3

11. Check the current cluster status:

$ ceph -s
  cluster:
    id:     b6f87f4d-c8f7-48c0-892e-71bc16cce7ff
    health: HEALTH_OK
  services:
    mon: 3 daemons, quorum mon1,mon2,mon3
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in
  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0B
    usage:   0B used, 0B / 0B avail
    pgs:
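The line to look at here is `mon: 3 daemons, quorum mon1,mon2,mon3`: all three monitors should be in quorum. If you script the deployment, that check can be automated. This is a sketch that parses the text output shown above; `quorum_size` is a hypothetical helper, and it assumes the `quorum <name>,<name>,...` wording of the Luminous output.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `ceph -s` text on stdin and print how many
# monitors follow the word "quorum" (prints nothing if no such line exists).
quorum_size() {
  awk '/quorum/ {
    for (i = 1; i < NF; i++)
      if ($i == "quorum") { print split($(i + 1), members, ","); exit }
  }'
}

# Example:
#   [ "$(ceph -s | quorum_size)" -eq 3 ] && echo "all 3 monitors are in quorum"
```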

OSD Deployment

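1. Partition the disk with sgdisk and format the data partition. Partition 2 becomes a 5 GB journal and partition 1 takes the rest of the disk as the data partition; the -t values are the GPT type GUIDs that ceph-disk uses to tag Ceph journal and Ceph data partitions: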

$ sgdisk -Z /dev/sdb
$ sgdisk -n 2:0:+5G -c 2:"ceph journal" -t 2:45b0969e-9b03-4f30-b4c6-b4b80ceff106 /dev/sdb
$ sgdisk -n 1:0:0 -c 1:"ceph data" -t 1:4fbd7e29-9d25-41b8-afd0-062c0ceff05d /dev/sdb
$ mkfs.xfs -f -i size=2048 /dev/sdb1

2. Create the OSD (the command prints the new OSD id):

$ ceph osd create
0

3. Create the data directory and mount the data partition on it:

$ mkdir /var/lib/ceph/osd/ceph-0
$ mount /dev/sdb1 /var/lib/ceph/osd/ceph-0/

4. Initialize the OSD data directory and create the OSD's key:

$ ceph-osd -i 0 --mkfs --mkkey

5. Delete the automatically generated journal file:

$ rm -rf /var/lib/ceph/osd/ceph-0/journal

6. Look up the UUID of the journal partition:

$ ll /dev/disk/by-partuuid/ | grep sdb2
lrwxrwxrwx 1 root root 10 Mar  4 11:49 8bf1768a-5d64-4696-a04d-e0a929fa99ef -> ../../sdb2
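`grep sdb2` works here, but it would also match sdb20 or similar names on a machine with many partitions. A slightly stricter lookup compares each symlink's target exactly. This is a sketch: `partuuid_of` is a hypothetical helper, and the optional second argument only exists so it can be tried against a scratch directory.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the PARTUUID whose by-partuuid symlink points
# exactly at partition $1 (e.g. sdb2). Returns 1 if nothing matches.
partuuid_of() {
  local part=$1 dir=${2:-/dev/disk/by-partuuid} link
  for link in "$dir"/*; do
    [ -L "$link" ] || continue
    # readlink prints the raw target, e.g. ../../sdb2
    if [ "$(basename "$(readlink "$link")")" = "$part" ]; then
      basename "$link"
      return 0
    fi
  done
  return 1
}

# Example: JOURNAL_UUID=$(partuuid_of sdb2)
```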

7. Using that UUID, symlink the journal partition into the OSD directory, write the UUID to journal_uuid, and recreate the journal:

$ ln -s /dev/disk/by-partuuid/8bf1768a-5d64-4696-a04d-e0a929fa99ef /var/lib/ceph/osd/ceph-0/journal
$ echo 8bf1768a-5d64-4696-a04d-e0a929fa99ef > /var/lib/ceph/osd/ceph-0/journal_uuid
$ ceph-osd -i 0 --mkjournal

8. Register the OSD's key with the cluster and grant its capabilities:

$ ceph auth add osd.0 mon 'allow profile osd' mgr 'allow profile osd' osd 'allow *' rgw 'allow *' -i /var/lib/ceph/osd/ceph-0/keyring

9. Update the CRUSH map (add osd.0 under host mon1 and move the host under root=default):

$ ceph osd crush add osd.0 0.01459 host=mon1
$ ceph osd crush move mon1 root=default
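The 0.01459 is the CRUSH weight. By Ceph convention an OSD's weight is its capacity in TiB, so 0.01459 corresponds to about 14.94 GiB, presumably the size of sdb1 in this lab. If your disks differ in size, the weight can be derived from the partition size rather than hard-coded. This is a sketch: `crush_weight_from_bytes` is a hypothetical helper, and the usage line assumes `blockdev --getsize64` is available (it is part of util-linux).

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: convert a size in bytes to a CRUSH weight in TiB
# (1 TiB = 2^40 = 1099511627776 bytes), rounded to 5 decimal places.
crush_weight_from_bytes() {
  awk -v b="$1" 'BEGIN { printf "%.5f\n", b / 1099511627776 }'
}

# Example:
#   w=$(crush_weight_from_bytes "$(blockdev --getsize64 /dev/sdb1)")
#   ceph osd crush add osd.0 "$w" host=mon1
```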

10. Change the ownership of the OSD directory:

$ chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

11. Activate the OSD:

$ ceph-disk activate --mark-init systemd --mount /dev/sdb1

Add the remaining OSDs the same way. Based on these steps I wrote a script that deploys the other OSDs quickly; run it on each node:

#!/bin/bash
# Create one filestore OSD (5G journal + data partition) on every unused disk of this host.
HOSTNAME=$(hostname)
SYS_DISK=sda
DISK=$(lsblk | grep disk | awk '{print $1}')
JOURNAL_TYPE=45b0969e-9b03-4f30-b4c6-b4b80ceff106   # GPT type GUID: ceph journal
DATA_TYPE=4fbd7e29-9d25-41b8-afd0-062c0ceff05d      # GPT type GUID: ceph data

# Partition disk $1 (2 = 5G journal, 1 = rest as data) and format the data partition
function Sgdisk() {
    sgdisk -n 2:0:+5G -c 2:"ceph journal" -t 2:$JOURNAL_TYPE /dev/$1 &> /dev/null
    sgdisk -n 1:0:0 -c 1:"ceph data" -t 1:$DATA_TYPE /dev/$1 &> /dev/null
    mkfs.xfs -f -i size=2048 /dev/${1}1 &> /dev/null
}

# Create the host bucket $1 in the CRUSH map and place it under root=default
function Crushmap() {
    ceph osd crush add-bucket $1 host &> /dev/null
    ceph osd crush move $1 root=default &> /dev/null
}

# Create OSD id $1 on disk $2
function CreateOSD() {
    OSD_URL=/var/lib/ceph/osd/ceph-$1
    DATA_PARTITION=/dev/${2}1
    # Anchor on the symlink target so sdb2 does not also match sdb20
    JOURNAL_UUID=$(ls -l /dev/disk/by-partuuid/ | grep "/${2}2$" | awk '{print $9}')
    mkdir -p $OSD_URL
    mount $DATA_PARTITION $OSD_URL
    ceph-osd -i $1 --mkfs --mkkey &> /dev/null
    rm -rf $OSD_URL/journal
    ln -s /dev/disk/by-partuuid/$JOURNAL_UUID $OSD_URL/journal
    echo $JOURNAL_UUID > $OSD_URL/journal_uuid
    ceph-osd -i $1 --mkjournal &> /dev/null
    ceph auth add osd.$1 mon 'allow profile osd' mgr 'allow profile osd' osd 'allow *' rgw 'allow *' -i $OSD_URL/keyring &> /dev/null
    # Place the OSD under this host (the original hard-coded host=mon1)
    ceph osd crush add osd.$1 0.01459 host=$HOSTNAME &> /dev/null
    chown -R ceph:ceph $OSD_URL
    ceph-disk activate --mark-init systemd --mount $DATA_PARTITION &> /dev/null
    if [ $? -eq 0 ] ; then
        echo -e "\033[32mosd.$1 was created successfully\033[0m"
    fi
}

# Create this host's CRUSH bucket if it does not exist yet
ceph osd tree | grep -w $HOSTNAME &> /dev/null
if [ $? != 0 ] ; then
    Crushmap $HOSTNAME
fi

# Deploy every disk that is not the system disk and has no ceph partitions yet
for i in $DISK ; do
    blkid | grep ceph | grep $i &> /dev/null
    if [ $? != 0 ] && [ $i != $SYS_DISK ] ; then
        Sgdisk $i
        ID=$(ceph osd create)
        CreateOSD $ID $i
    else
        continue
    fi
done

Mgr Deployment

1. Create the key:

$ ceph auth get-or-create mgr.mon1 mon 'allow *' osd 'allow *'

2. Create the data directory:

$ mkdir /var/lib/ceph/mgr/ceph-mon1

3. Export the key into the data directory:

$ ceph auth get mgr.mon1 -o /var/lib/ceph/mgr/ceph-mon1/keyring

4. Start the mgr and enable it at boot:

$ systemctl enable ceph-mgr@mon1
$ systemctl start ceph-mgr@mon1
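Steps 1 to 4 can be wrapped in a function and run on each of the other nodes. This is a minimal sketch: `setup_mgr`, `run` and `DRY_RUN` are hypothetical names introduced here, the commands are the ones above (with `mkdir -p` so a rerun does not fail), and it assumes the node already has /etc/ceph/ceph.conf and the admin keyring from the Monitor deployment. With `DRY_RUN=1` it only prints what it would run.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: steps 1-4 for mgr.<name> (defaults to the short hostname)
setup_mgr() {
  local name=${1:-$(hostname -s)}
  local dir=/var/lib/ceph/mgr/ceph-$name
  run ceph auth get-or-create "mgr.$name" mon 'allow *' osd 'allow *'
  run mkdir -p "$dir"
  run ceph auth get "mgr.$name" -o "$dir/keyring"
  run systemctl enable "ceph-mgr@$name"
  run systemctl start "ceph-mgr@$name"
}

# Example, on mon2:  DRY_RUN=1 setup_mgr mon2   # review first
#                    setup_mgr mon2
```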

Add a mgr on the other nodes in the same way.

5. Enable the dashboard module and look up its URL:

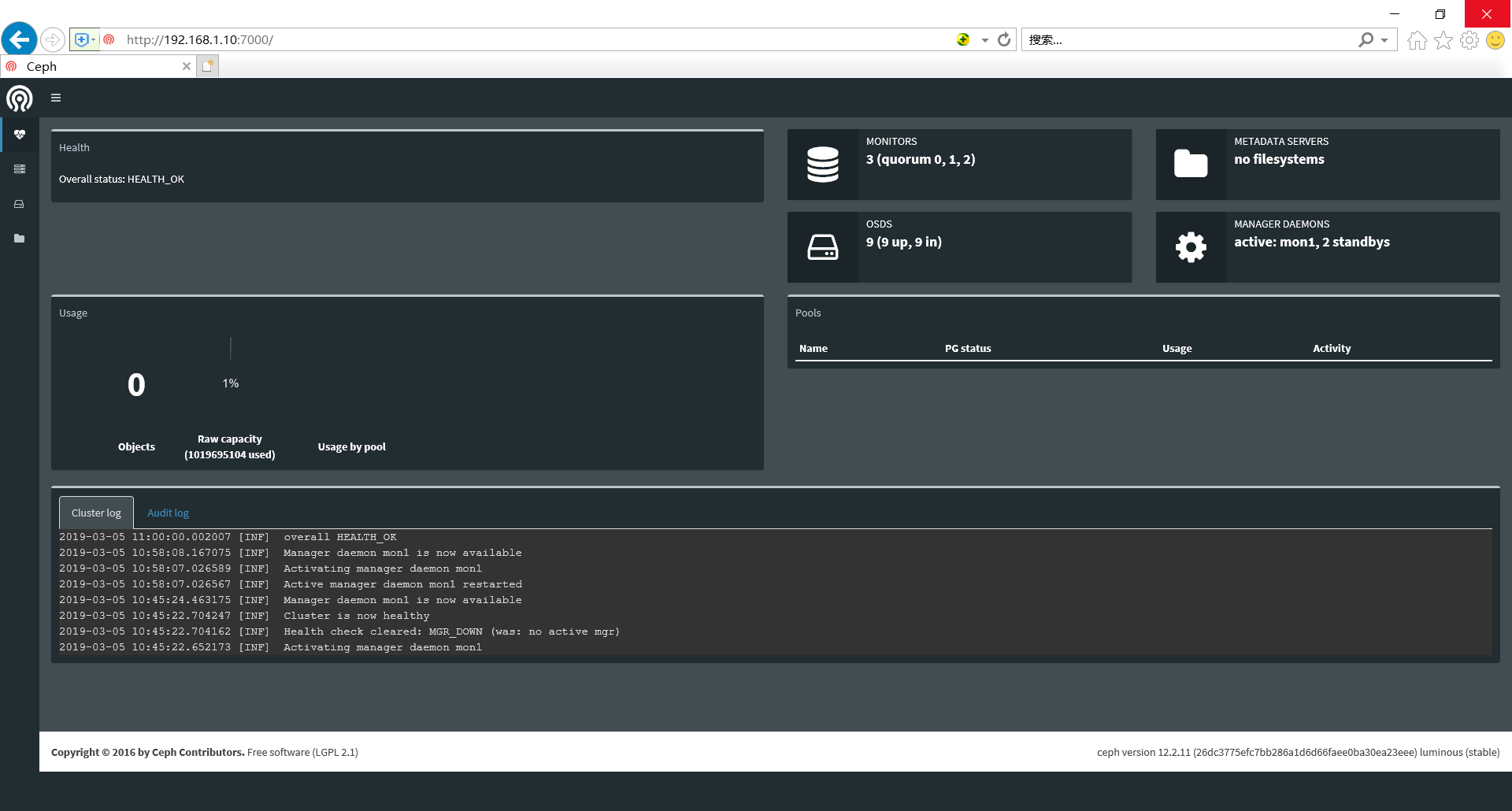

$ ceph mgr module enable dashboard$ ceph mgr services{"dashboard": "http://mon1:7000/"}

6、浏览器访问dashborad

RGW Deployment

1. Install the package:

$ yum -y install ceph-radosgw

2. Create the keyring:

$ sudo ceph-authtool --create-keyring /etc/ceph/ceph.client.radosgw.keyring

3. Change the keyring's owner to the ceph user:

$ sudo chown ceph:ceph /etc/ceph/ceph.client.radosgw.keyring

4. Create the rgw user and generate its key:

$ sudo ceph-authtool /etc/ceph/ceph.client.radosgw.keyring -n client.rgw.mon1 --gen-key

5. Grant the user capabilities:

$ sudo ceph-authtool -n client.rgw.mon1 --cap osd 'allow rwx' --cap mon 'allow rwx' --cap mgr 'allow rwx' /etc/ceph/ceph.client.radosgw.keyring

6. Import the key into the cluster:

$ sudo ceph -k /etc/ceph/ceph.client.admin.keyring auth add client.rgw.mon1 -i /etc/ceph/ceph.client.radosgw.keyring

7. Add an rgw section to the configuration file:

$ vim /etc/ceph/ceph.conf
[client.rgw.mon1]
host=mon1
keyring=/etc/ceph/ceph.client.radosgw.keyring
rgw_frontends = civetweb port=8080
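Each rgw node needs its own `[client.rgw.<host>]` section, so appending it from a script avoids typos when repeating this on mon2 and mon3. This is a sketch: `append_rgw_section` is a hypothetical helper that writes the same three keys as above and skips the append if the section already exists.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: append [client.rgw.<host>] to the config file $1.
#   $2 host name   $3 civetweb port (defaults to 8080)
append_rgw_section() {
  local conf=$1 host=$2 port=${3:-8080}
  # Do nothing if the section is already there, so reruns are harmless
  if grep -qxF "[client.rgw.$host]" "$conf" 2> /dev/null; then
    return 0
  fi
  cat >> "$conf" <<EOF

[client.rgw.$host]
host = $host
keyring = /etc/ceph/ceph.client.radosgw.keyring
rgw_frontends = civetweb port=$port
EOF
}

# Example, on mon2: append_rgw_section /etc/ceph/ceph.conf mon2
```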

8. Start rgw and enable it at boot:

$ systemctl enable ceph-radosgw@rgw.mon1
$ systemctl start ceph-radosgw@rgw.mon1

9. Check that rgw is listening on the port:

$ netstat -antpu | grep 8080
tcp        0      0 0.0.0.0:8080        0.0.0.0:*        LISTEN      7989/radosgw

Deploy rgw on the other nodes the same way. When you are done, check the cluster status with ceph -s:

$ ceph -s
  cluster:
    id:     b6f87f4d-c8f7-48c0-892e-71bc16cce7ff
    health: HEALTH_WARN
            too few PGs per OSD (10 < min 30)
  services:
    mon: 3 daemons, quorum mon1,mon2,mon3
    mgr: mon1(active), standbys: mon2, mon3
    osd: 9 osds: 9 up, 9 in
    rgw: 3 daemons active
  data:
    pools:   4 pools, 32 pgs
    objects: 187 objects, 1.09KiB
    usage:   976MiB used, 134GiB / 135GiB avail
    pgs:     32 active+clean

Configure s3cmd

1. Install the package:

$ yum -y install s3cmd

2. Create an S3 user:

$ radosgw-admin user create --uid=admin --access-key=123456 --secret-key=123456 --display-name=admin
{
    "user_id": "admin",
    "display_name": "admin",
    "email": "",
    "suspended": 0,
    "max_buckets": 1000,
    "auid": 0,
    "subusers": [],
    "keys": [
        {
            "user": "admin",
            "access_key": "123456",
            "secret_key": "123456"
        }
    ],
    "swift_keys": [],
    "caps": [],
    "op_mask": "read, write, delete",
    "default_placement": "",
    "placement_tags": [],
    "bucket_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "user_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "temp_url_keys": [],
    "type": "rgw"
}

3. Create the s3cmd configuration file:

$ vim ~/.s3cfg
[default]
access_key = 123456
bucket_location = US
cloudfront_host = 192.168.1.10:8080
cloudfront_resource = /2010-07-15/distribution
default_mime_type = binary/octet-stream
delete_removed = False
dry_run = False
encoding = UTF-8
encrypt = False
follow_symlinks = False
force = False
get_continue = False
gpg_command = /usr/bin/gpg
gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
gpg_passphrase =
guess_mime_type = True
host_base = 192.168.1.10:8080
host_bucket = 192.168.1.10:8080/%(bucket)
human_readable_sizes = False
list_md5 = False
log_target_prefix =
preserve_attrs = True
progress_meter = True
proxy_host =
proxy_port = 0
recursive = False
recv_chunk = 4096
reduced_redundancy = False
secret_key = 123456
send_chunk = 96
simpledb_host = sdb.amazonaws.com
skip_existing = False
socket_timeout = 300
urlencoding_mode = normal
use_https = False
verbosity = WARNING
signature_v2 = True
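Most of the keys above are s3cmd defaults. The ones that actually point s3cmd at this rgw are the credentials, `host_base`, `host_bucket`, `use_https` and `signature_v2`. As far as I know s3cmd falls back to its built-in defaults for keys missing from the file (and `s3cmd --configure` can also generate the file interactively), so a shorter file should be enough; treat that as an assumption to verify against your s3cmd version. `write_min_s3cfg` is a hypothetical helper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write a minimal s3cmd config for an rgw endpoint.
#   $1 output file   $2 host:port   $3 access key   $4 secret key
write_min_s3cfg() {
  local out=$1 endpoint=$2 access=$3 secret=$4
  cat > "$out" <<EOF
[default]
access_key = $access
secret_key = $secret
host_base = $endpoint
host_bucket = $endpoint/%(bucket)
use_https = False
signature_v2 = True
EOF
  chmod 600 "$out"   # the file contains credentials
}

# Example: write_min_s3cfg ~/.s3cfg 192.168.1.10:8080 123456 123456
```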

4. In the [global] section of ceph.conf, add default pg_num and pgp_num settings for new pools:

$ vim /etc/ceph/ceph.conf
osd_pool_default_pg_num = 64
osd_pool_default_pgp_num = 64
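This is why the earlier ceph -s reported "too few PGs per OSD (10 < min 30)". Ceph counts PG replicas per OSD: the sum over pools of pg_num times the pool's replica size, divided by the number of OSDs. Assuming the default replica size of 3, 32 PGs × 3 / 9 OSDs is about 10.7, shown as 10. The new defaults only apply to pools created afterwards; the final status below shows 160 PGs (presumably the original 32 plus two 64-PG pools that rgw created for the test bucket), which is about 53 per OSD and clears the warning. The helpers below reproduce that arithmetic. `pgs_per_osd` and `suggested_total_pgs` are hypothetical names, and the second one encodes the older rule of thumb from the Ceph placement-group docs (about 100 PGs per OSD divided by the replica count, rounded up to a power of two), which is only a starting point.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Average PG replicas per OSD (integer division, as in the health warning)
#   $1 total PGs across pools   $2 replica size   $3 number of OSDs
pgs_per_osd() {
  local total_pgs=$1 size=$2 osds=$3
  echo $(( total_pgs * size / osds ))
}

# Rule of thumb: (OSDs * 100) / replica size, rounded up to a power of two
suggested_total_pgs() {
  local osds=$1 size=$2
  local target=$(( osds * 100 / size ))
  local p=1
  while (( p < target )); do
    p=$(( p * 2 ))
  done
  echo "$p"
}

# Example: pgs_per_osd 32 3 9    -> the "10" in the warning
#          pgs_per_osd 160 3 9   -> after the test bucket's pools were created
```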

5. Restart the Monitor so the settings take effect:

$ systemctl restart ceph-mon@mon1

6. Create a bucket and test uploading a file:

$ s3cmd mb s3://test
Bucket 's3://test/' created
$ s3cmd put osd.sh s3://test
upload: 'osd.sh' -> 's3://test/osd.sh'  [1 of 1]
 1684 of 1684   100% in    1s  1327.43 B/s  done

7. Check the cluster status again:

$ ceph -s
  cluster:
    id:     b6f87f4d-c8f7-48c0-892e-71bc16cce7ff
    health: HEALTH_OK
  services:
    mon: 3 daemons, quorum mon1,mon2,mon3
    mgr: mon1(active), standbys: mon2, mon3
    osd: 9 osds: 9 up, 9 in
    rgw: 3 daemons active
  data:
    pools:   6 pools, 160 pgs
    objects: 194 objects, 3.39KiB
    usage:   979MiB used, 134GiB / 135GiB avail
    pgs:     160 active+clean